As per Part 1 – The need here was to create a completely airgapped – i.e. no admin accounts for logging into reporting dashboards – reporting mechanism for managers across a tenant shared between 20 or more business units. The reporting was primarily to monitor licenses and how they were assigned, what usage the business unit was getting from that and other metrics which are useful to report on.

Part 1 – Using Power Platform and Graph for Office 365 Reporting – Users

Part 2 – Using Power Platform and Graph for Office 365 Reporting – License Assignments

Part 3 -Using Power Platform and Graph for Office 365 Reporting – Data Cleansing

Part 4 – Using Power Platform and Graph for Office 365 Reporting – Summary and History

As per part 3 I don’t want to save the entire license history of my tenant in here as it would quickly grow enormous. I do however want to save some daily totals. In this particular example the tenant was used by multiple businesses who want to be able to slice that data to show only their user information. The target then is to calculate and store a total number of each license we have against each users email domain. Obviously the same technique can be used to record on any other user attribute.

In SQL terms we’d be looking at a join followed by a a group by, it would take about 5 minutes to write and 10 minutes to run. In Flow this is “somewhat” more complicated, so much so this is likely to be the longest post I ever make.

Data Entity

First off we need somewhere to keep our data, so a new entity. Its fairly simple, so we just go to make.powerapps.com then Data | Entities and hit +New Entity to create a entity called “M365_Reporting_LicenseAggregate” with a primary field “M365_Reporting_LAgg_DomainSKU”. We just need 4 other fields in here:

| M365_Reporting_LAgg_DataTime | Date and Time | |

| M365_Reporting_LAgg_Count | Text | 100 |

| M365_Reporting_LAgg_Domain | Text | 100 |

| M365_Reporting_LAgg_SKU | Text | 100 |

Flow

Okay. Right. Deep Breath. In this flow we need to:

- Get the Users and Licenses data from CDS

- Combine them so we can see what user has what license (JOIN)

- Count the number of each unique license sku for each unique UPN domain (GROUP BY, COUNT)

- Add the data to the new entity.

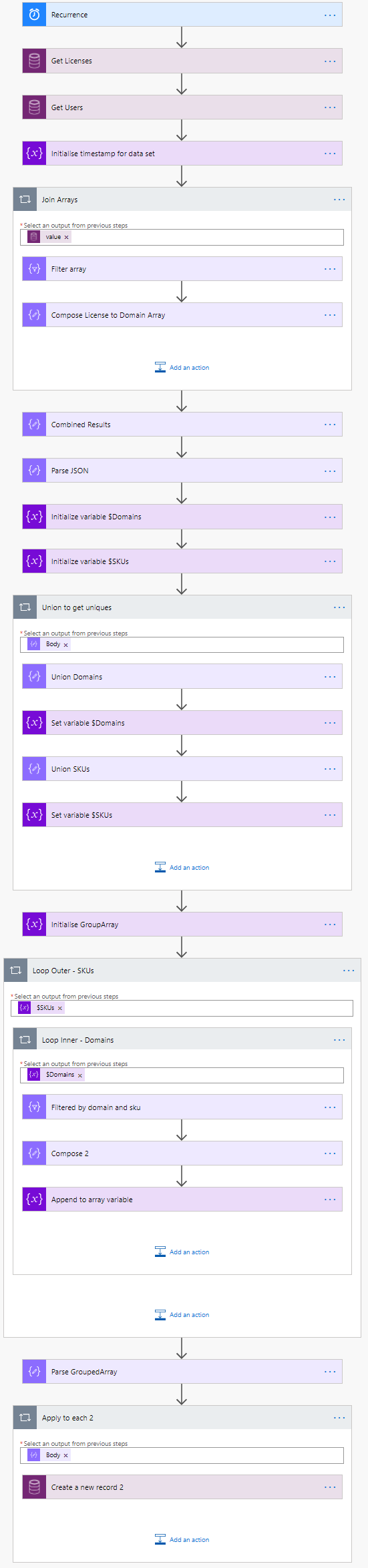

If you have never tried any of this in flow your are almost certainly thinking it sounds simple. Here’s the working flow…

Yeah. I’m going to Break it down by operation…

a – Get Data

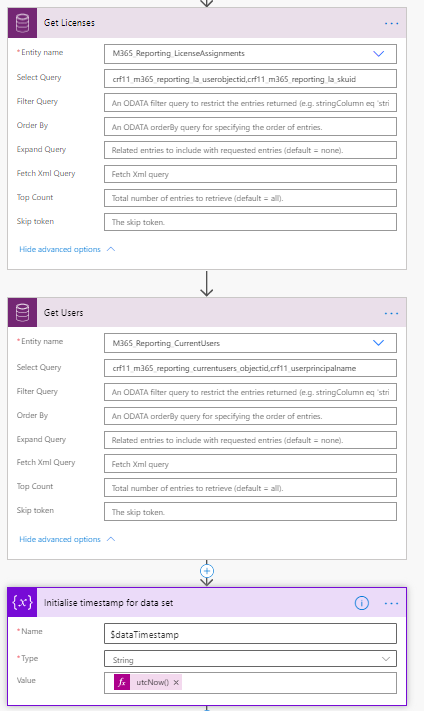

The first task (after the recurrence trigger, this flow I run weekly on Fridays) is to get the data from the common data service entities. This is a simple pair of tasks as below:

You’ll see that Ive used a select query to return only the data we actually need to build a list of licenses against user names – User ID and SKU ID from the license mapping entity and User ID and UserPrincipalName from the Users entity.

This Flow takes a LONG time to run so I’ve also grabbed the time that the data was queried and stuck it in a variable called $dataTimestamp.

b – Join Data

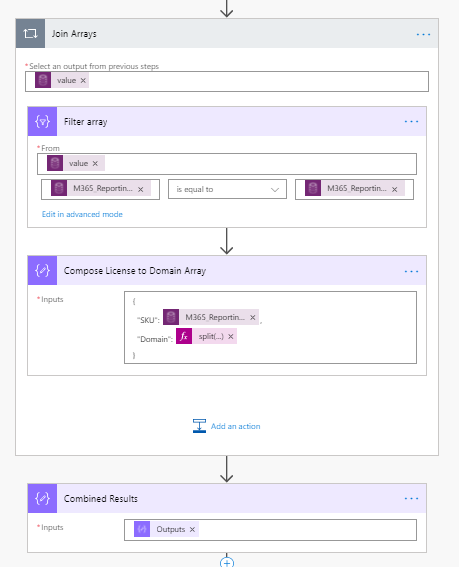

Our first data manipulation is to join the two arrays of data we just queried. We do this by stepping through the values of one array and using it to filter the other then composing a new JSON table of all the results. It looks like this:

We create a For Each task based on the values of the “Get licenses” CDS task above: @{outputs(‘Get_Licenses’)?[‘body/value’]}

We then create a Filter Array data operation using the values from the “Get users” CDS task – @outputs(‘Get_Users’)?[‘body/value’] for our “From:” parameter and filtering for items Where the User Object Id matches: @equals(item()?[‘crf11_m365_reporting_currentusers_objectid’], items(‘Join_Arrays’)?[‘crf11_m365_reporting_la_userobjectid’]

We can then use this filtered array to compose a new string to build a JSON array.

{

“SKU”: @{items(‘Join_Arrays’)?[‘crf11_m365_reporting_la_skuid’]},

“Domain”: @{split(first(body(‘Filter_array’))?[‘crf11_userprincipalname’], ‘@’)[1]}

}

The SKU is the SKU Id from the current loop item. To get the domain we use userprincipalname from all matching users for that skuid from the array filter operation, split it at the “@” symbol using split() and return the second string from the split operation [1].

Outside the loop we create a Compose action called Combined Results which takes all of the outputs from the in-loop compose and concatenates them into one nice big JSON – @{outputs(‘Compose_License_to_Domain_Array’)}

c – Group Data by Domain

Congratulations on making it this far. You may have looked through the above way of doing a JOIN and thought “that’s a bit of a cludge right there”. You aren’t wrong but you ain’t seen nothing yet, now we are going to do a GROUP BY.

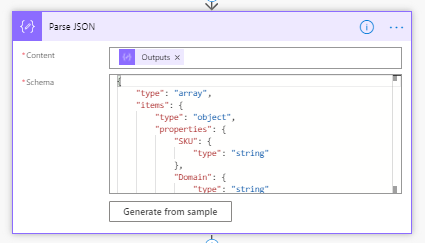

First off we need to take that massive JSON string we created in the “Combined Results” compose action to join our arrays and parse it into a new array:

The schema here is simple, we just have two properties and they cant be nulled:

{

"type": "array",

"items": {

"type": "object",

"properties": {

"SKU": {

"type": "string"

},

"Domain": {

"type": "string"

}

},

"required": [

"SKU",

"Domain"

]

}

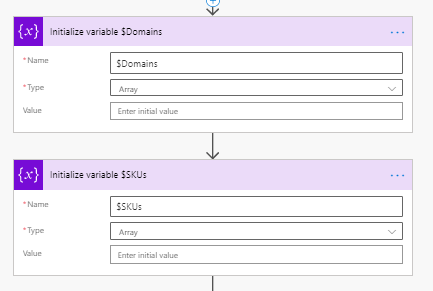

}Next thing we need is a every unique value for Domain from our joined array and every unique value for SKU ID. To do this we are going to create two new array variables, $Domains and $SKUs:

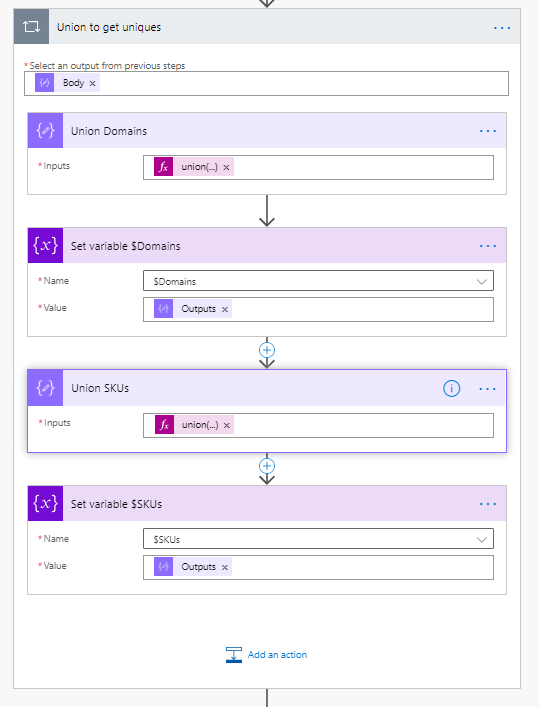

Now we run through the whole array of licenses and domains performing union of the Domains and SKUs with these two new arrays each loop, UNION will add any new unique value into the array.

To achieve this we run a Apply to Each against the body from our JSON Parse then uSUe a Compose action to do a union of the Domain for the current item with our $domains array:

@union(variables('$Domains'),createArray(items('Union_to_get_uniques')['Domain']))Then we simply set the $Domain variable to the value of the union so the list is cumulative the repeat exactly the same thing for SKUs.

@union(variables('$SKUs'),createArray(items('Union_to_get_uniques')['SKU']))Some may note that my big take away from the last part was “never update a variable in a loop” as it breaks when you run the loop with concurrency. In this case I’m only ever adding to the variable so it’s fine, I hereby update the rule to “Never update a variable in a loop unless it’s fine” :).

The first loop looks like this:

We now have 3 arrays – one with every license SKU assignment and the domain it was assigned to and two with every unique value for SKU and Domain.

Getting our Grouped and aggregated data is one more loop.

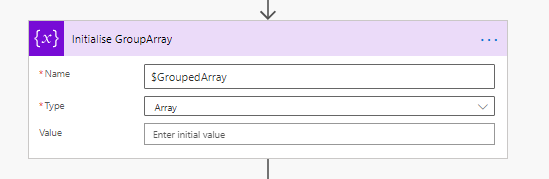

First we created a new array variable called $GroupedArray.

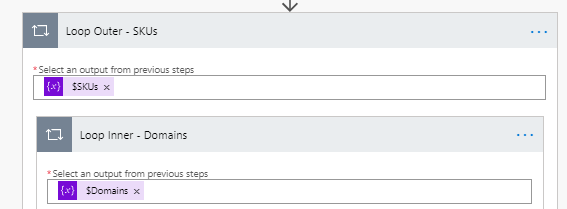

Then we open a Apply to Each Loop for each value of $SKUs, then an inner loop for each value of $Domains.

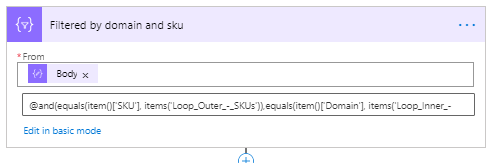

In this nested loop we perform 3 actions. First we do a filter array filtering the body of the Parse JSON action (which contains our licenseSKU/Domain array) for every value where the domain and sku equal the current loop values:

The code view looks like:

{

"inputs": {

"from": "@body('Parse_JSON')",

"where": "@and(equals(item()['SKU'], items('Loop_Outer_-_SKUs')),equals(item()['Domain'], items('Loop_Inner_-_Domains')))"

}

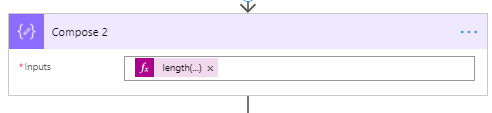

}We then use a Compose to get the number of hits from this Filter Action:

That’s @length(body(‘Filtered_by_domain_and_sku’))

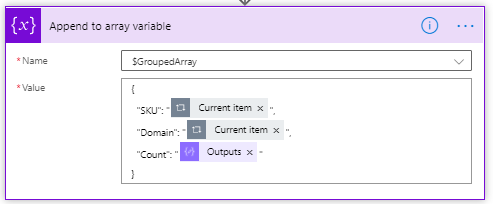

Then we use an Append to Array variable task to stuff the current SKU, current Domain and number of hits onto the end of $GroupedArray:

So there we go, we now have an array grouped by SKU, Domain and Count. Easy.

d – Load Data into CDS

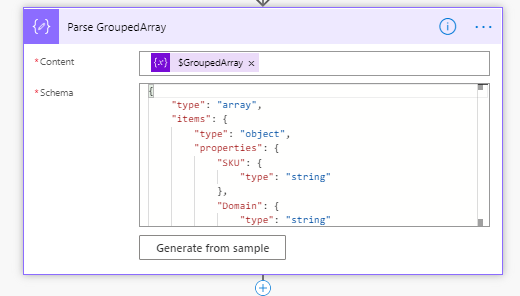

Now we have the simple task of Loading the data into our entity. First I parse the JSON in the array variable $GroupedArray (just to make it visible in the dynamic content box in all honesty)

The schema again is simple as we just created the JSON in the loop:

{

"type": "array",

"items": {

"type": "object",

"properties": {

"SKU": {

"type": "string"

},

"Domain": {

"type": "string"

},

"Count": {

"type": "string"

}

},

"required": [

"SKU",

"Domain",

"Count"

]

}

}Then we push the Parsed content into the Entity creating a new record for each item:

Notes

This works.

Using this method I have a pretty much always up (at least as long as the Power Platform doesn’t go down), fully cloud only,platform to run reports against. No database maintenance, no servers, no task scheduler or CRON jobs . Permissions to data sets are AAD integrated, no domain user accounts.

Once this data goes to PowerBI combined with other mechanism (Usage reporting, log analytics queries, other graph queries, Ill post them as I do them) it will give me some nice reports to share that are much more customisable than the standard reports so I can target data right at the people I need to see it.

The big question though is – is Power Platform the right solution? I could have built this with Azure Functions, PowerShell and SQL much easier with a much lower run time and surfaced the data into PowerBI. I would also have a far easier time if I was called in to support or fix that solution than someone else’s Power Platform version.

So I dont know. It was worth doing just to start thinking about what Flow or Power Automate might be able to do.

![]()